Dear Visitors,

Spirit of the East: the Qilin steps forth! Pre-sale of the Truth & Inference 6th Anniversary gift box, "Qilin of the East," starts on April 28 at 10:30 AM (UTC+8) at the NetEase Games Store. Don’t miss out!

Here is the link: smartyoudao.com/collections/id…

#IdentityV #6thAnniversary

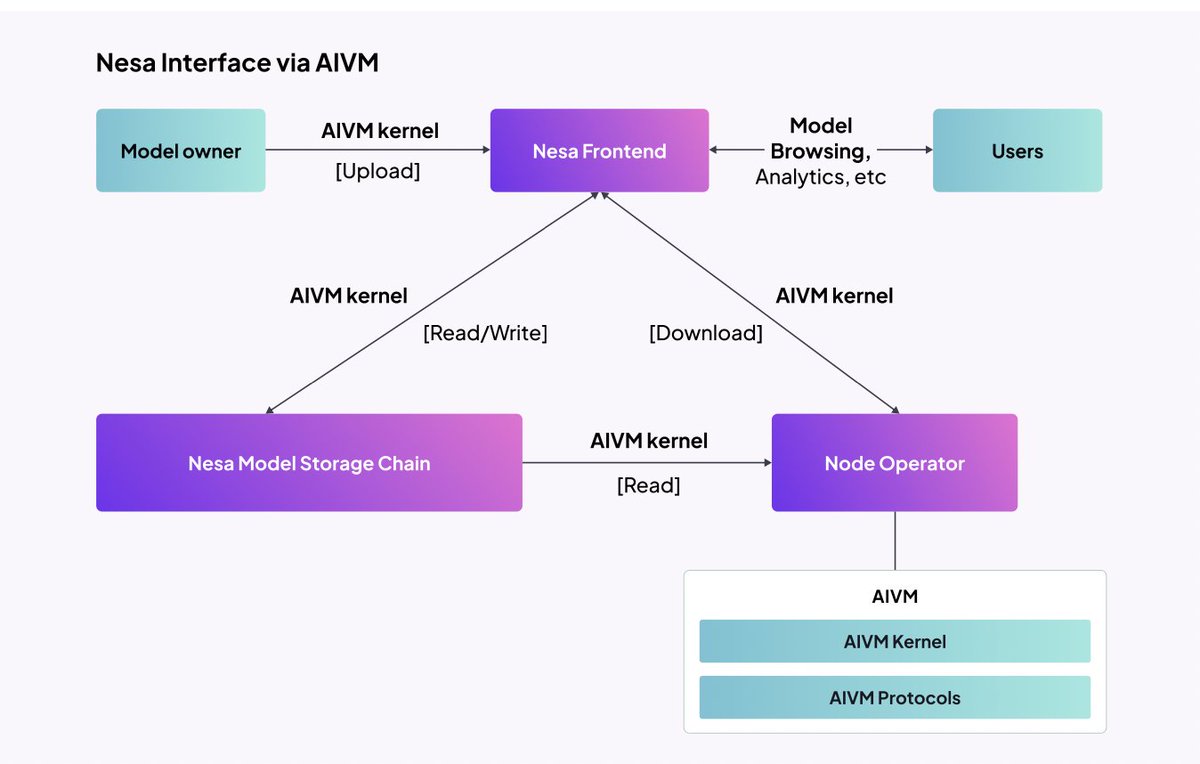

Ready to jump to hyperspace with our accelerators? 🚀

Immerse yourself in the galaxy of #GenAI this May the 4th with the inference and efficiency of #IntelGaudi 3 AI accelerators. #MayThe4thBeWithYou