🌅 🥨🦙PRETTY BOY PRETZEL & A PRETTY SKY! 🌅🦙🥨

#farmstop #farmstopaberdeenshire #farmlife #scottishfarming #aberdeenshire #visitaberdeenshire #visitscotland #farmexperience #alpaca #alpacalover #alpacafarm #alpacalife #model #poser

February 2024, private booking

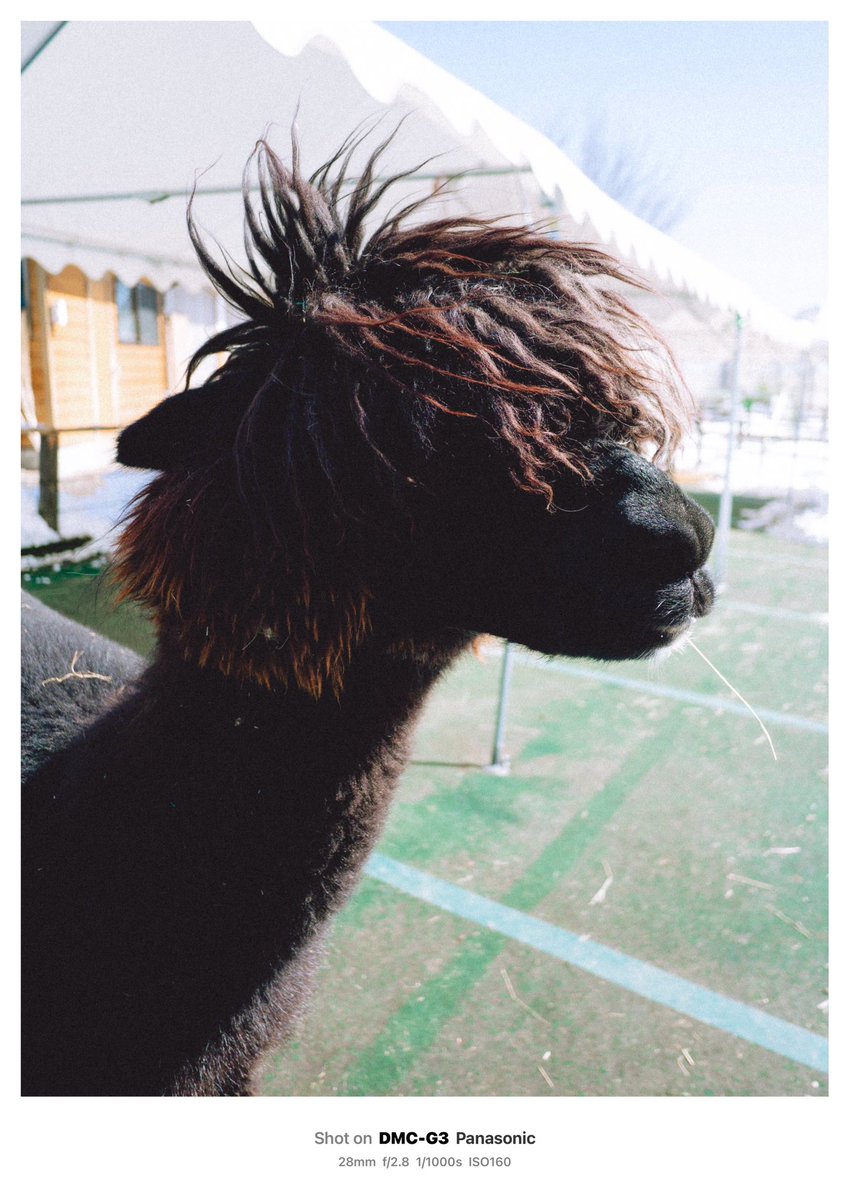

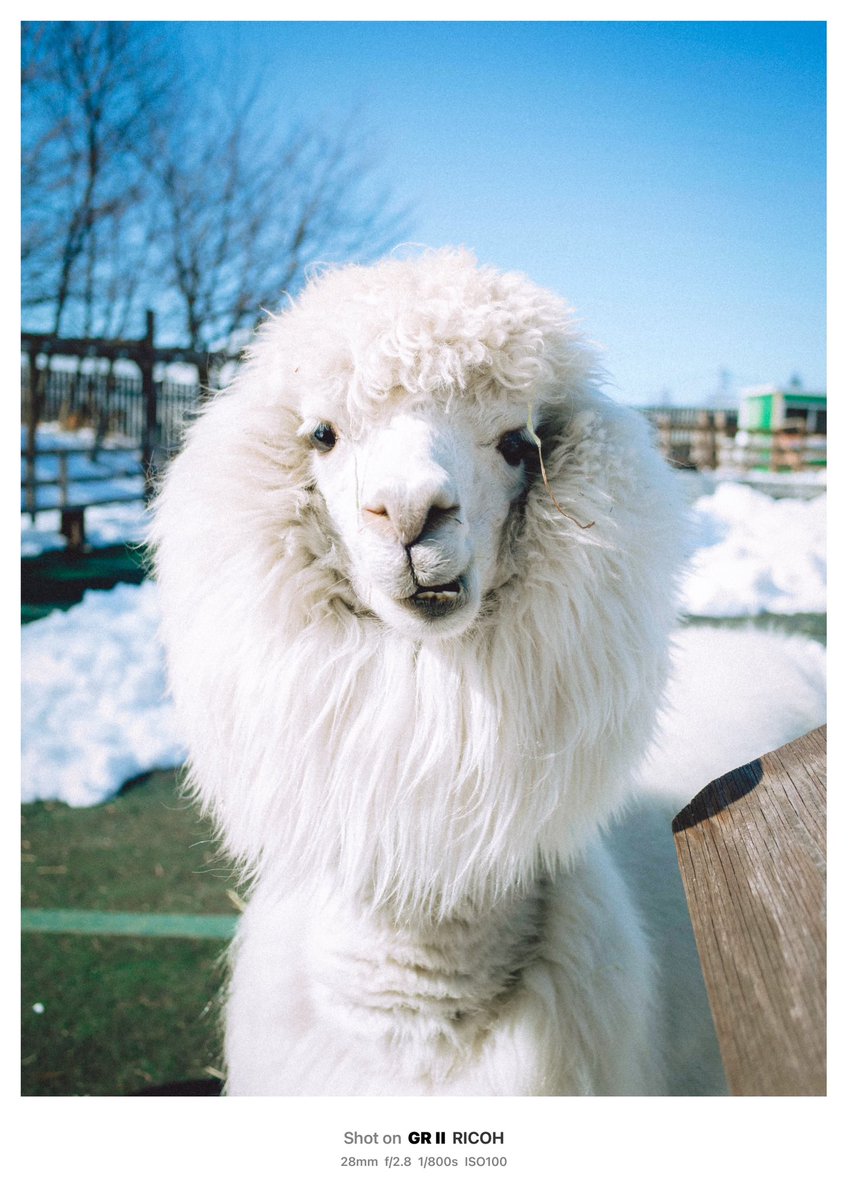

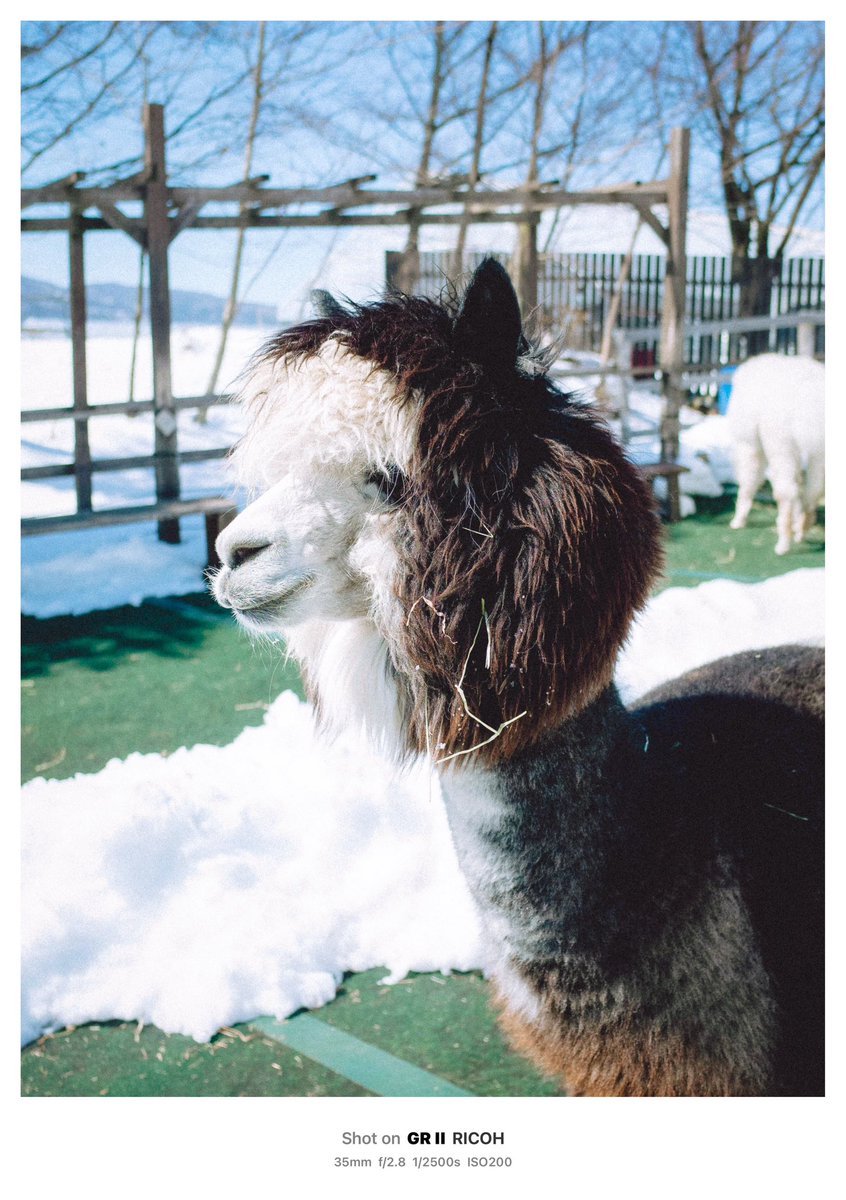

On windy days, Rin-chan's hair stands straight up!

#八ヶ岳アルパカ牧場 #八ヶ岳アルパカファーム #アルパカ #yatsugatakealpacafarm #alpaca #富士見町 #アルパカ写真 #alpacalove #alpacafarm #animalphotography #動物写真 #凛 #凛ちゃん

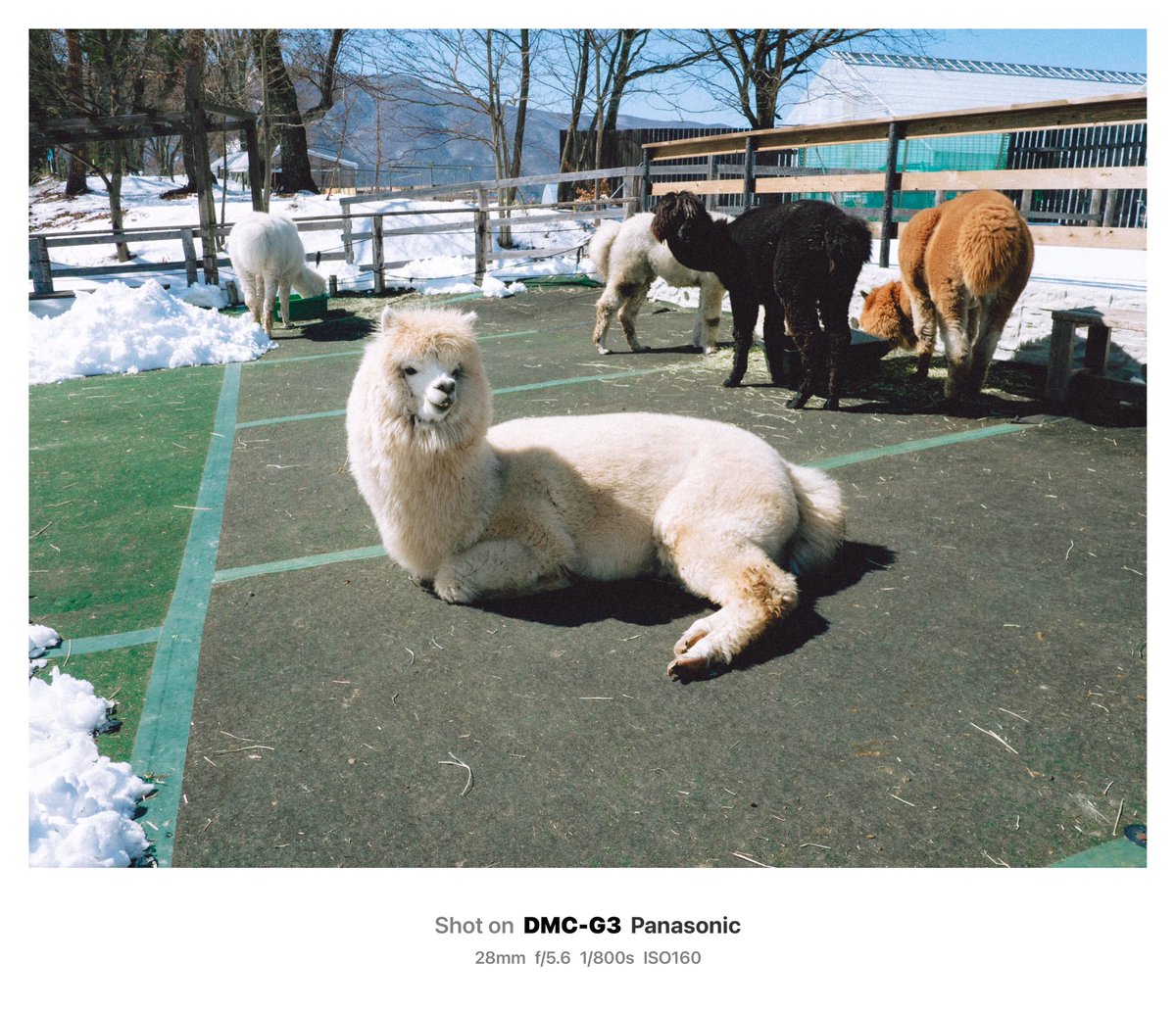

February 2024, private booking

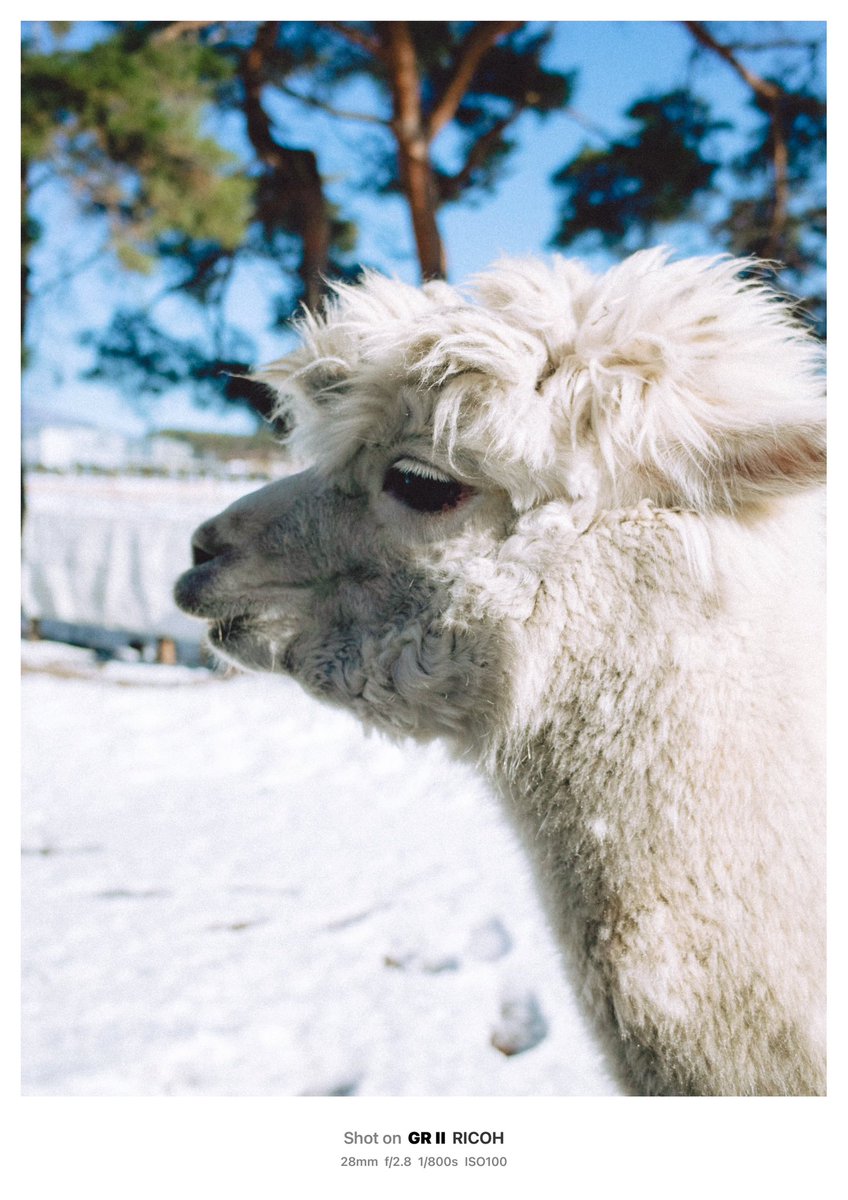

During private bookings, even Hana-chan is extra keen on snacks 🌱

#八ヶ岳アルパカ牧場 #八ヶ岳アルパカファーム #アルパカ #yatsugatakealpacafarm #alpaca #富士見町 #アルパカ写真 #alpacalove #alpacafarm #animalphotography #動物写真 #花ちゃん #おはなはん

February 2024, private booking

Sacchan, you've gone all sleek???

#八ヶ岳アルパカ牧場 #八ヶ岳アルパカファーム #アルパカ #yatsugatakealpacafarm #alpaca #富士見町 #アルパカ写真 #alpacalove #alpacafarm #animalphotography #動物写真 #さつき #サツキ #さっちゃん

The Alpacas: Cute, Colorful, and Eco-Friendly!

#alpaca #alpacas #cuteanimals #alpacafarm #alpacaworld #alpacalove #animalshort #animals #education #educationalvideo #naturedocumentary #wildlife #animalfacts

February 2024, private booking

Sexy Mitsuha ☘️

#八ヶ岳アルパカ牧場 #八ヶ岳アルパカファーム #アルパカ #yatsugatakealpacafarm #alpaca #富士見町 #アルパカ写真 #alpacalove #alpacafarm #animalphotography #動物写真 #みつは #みっちゃん

What a beautiful view this morning 🥰🌅

#nofilter #alpacafarm #walkingwithalpacaslincolnshire #visitlincolnshire #alpacacafe #lincolnshireskies

Despite the rain ☔️… these Rohwer Elementary kindergarteners are STILL excited to see some alpacas!! ✌🏻❤️🦙 #FieldTrip #Kindergarten #AlpacaFarm Millard Public Schools MPS Human Resources MPS Elem Ed

February 2024, private booking

Espe was hungry for snacks that day too.

She came closer than ever! lol

#八ヶ岳アルパカ牧場 #八ヶ岳アルパカファーム #アルパカ #yatsugatakealpacafarm #alpaca #富士見町 #アルパカ写真 #alpacalove #alpacafarm #animalphotography #動物写真 #エスペランサ #エスペ #ペラン

#Photo Update: #WoodlandsAlpacaFarm, #Cheshire, #England, #Europe

#Photos from 26/5/23 - flickr.com/photos/coaster…

#Woodlands #AlpacaFarm #Alpaca #Alpacas #Animal #Animals #Farm #Thelwall #Britain #GreatBritain #UnitedKingdom

Flat Oscar visited an Alpaca Farm and it was a blast seeing all of the furry friends. 🦙🧡🦙

#flatoscar

#crescentmoonranch #alpacafarm