Books to Become a Machine Learning Engineer! #BigData #Analytics #DataScience #IoT #IIoT #PyTorch #Python #RStats #TensorFlow #Java #JavaScript #ReactJS #GoLang #CloudComputing #Serverless #DataScientist #Linux #Books #Programming #Coding #100DaysofCode

geni.us/Become-ML-Engi…

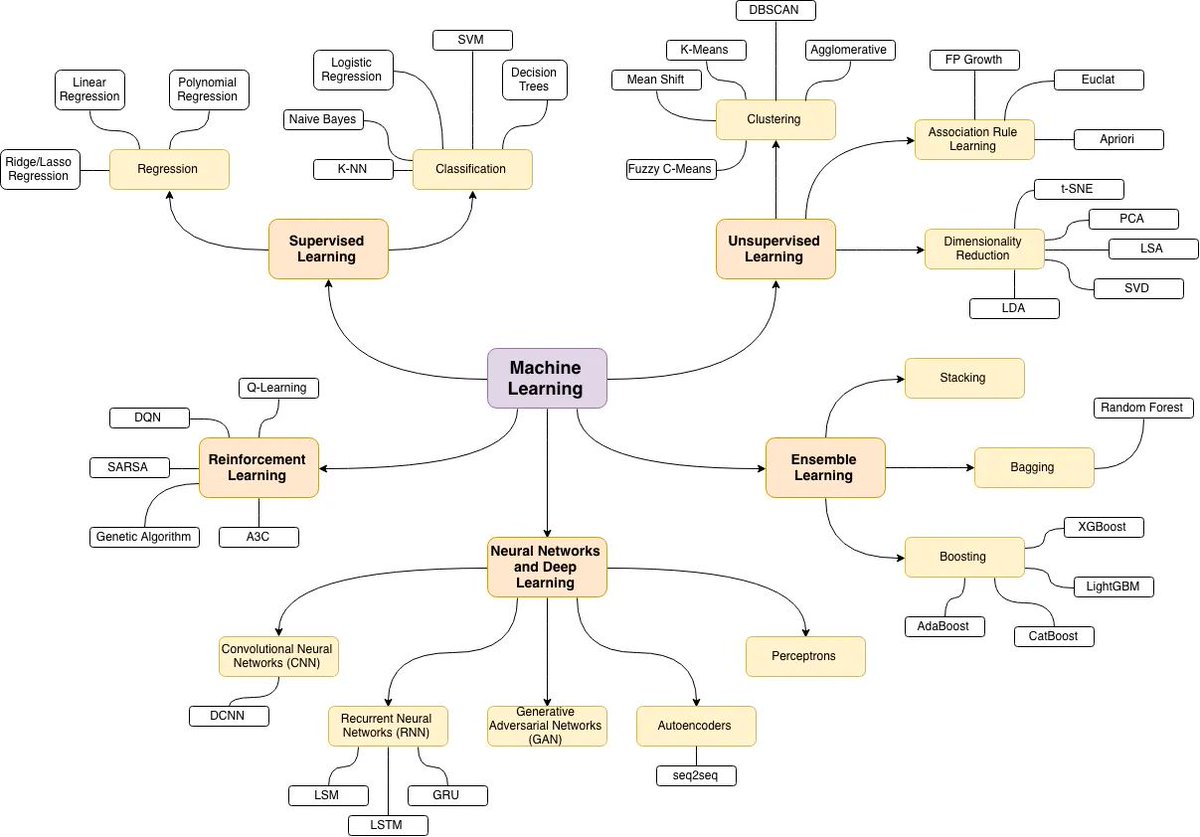

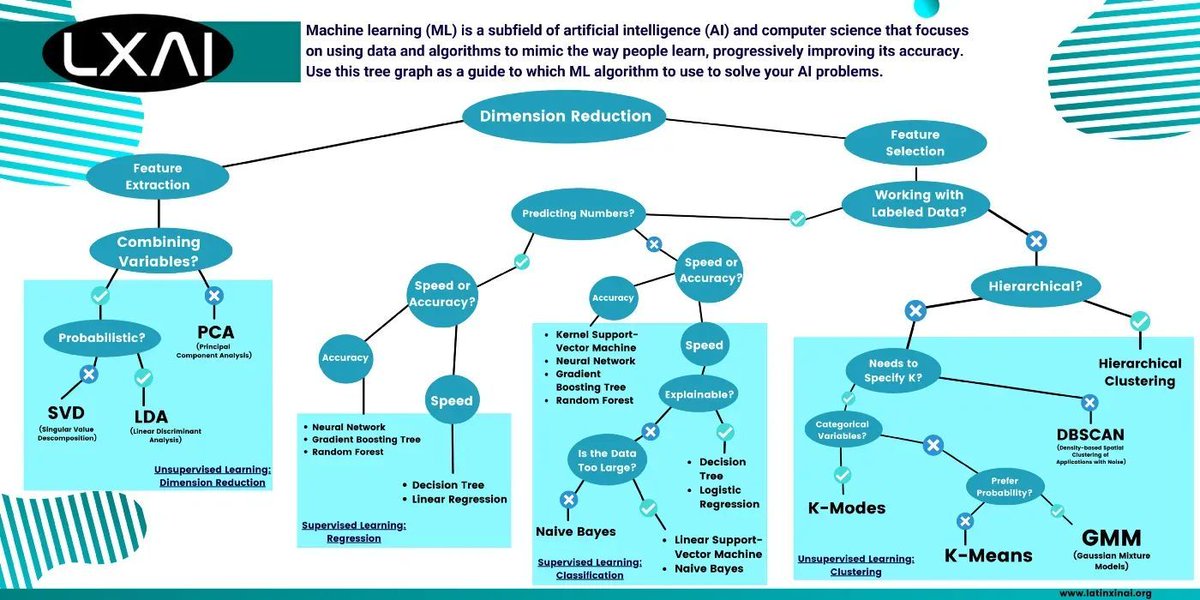

Cheat-Sheet! #MachineLearning #Algorithms! #BigData #Analytics #DataScience #AI #IoT #IIoT #PyTorch #Python #RStats #TensorFlow #Java #JavaScript #ReactJS #GoLang #CloudComputing #Serverless #DataScientist #Linux #Programming #Coding #100DaysofCode

geni.us/ML-Algo-Ch-Sh

Machine Learning with R! #BigData #Analytics #DataScience #AI #MachineLearning #IoT #IIoT #PyTorch #Python #RStats #TensorFlow #Java #JavaScript #ReactJS #GoLang #CloudComputing #Serverless #DataScientist #Linux #Books #Programming #Coding #100DaysofCode

geni.us/ML-w-R

Best Data Science Books to Read! #BigData #Analytics #DataScience #IoT #IIoT #PyTorch #Python #RStats #TensorFlow #Java #JavaScript #ReactJS #GoLang #CloudComputing #Serverless #DataScientist #Linux #Books #Programming #Coding #100DaysofCode

geni.us/Top-DScien-Boo…

#PyTorch vs #TensorFlow: Choosing the Right Deep Learning Framework! #BigData #Analytics #DataScience #AI #MachineLearning #IoT #IIoT #Python #RStats #Java #GoLang #CloudComputing #Serverless #DataScientist #Linux #Programming #Coding #100DaysofCode

geni.us/PyTorch--TF

Intro: Statistical Independence! #BigData #Analytics #DataScience #AI #MachineLearning #IoT #IIoT #PyTorch #Python #RStats #TensorFlow #Java #JavaScript #ReactJS #GoLang #CloudComputing #Serverless #DataScientist #Linux #Programming #Coding #100DaysofCode

geni.us/Stats-Independ…

GitHub repository with everything you need to become proficient in #PyTorch, with 15 implemented projects: github.com/Coder-World04/… — compiled by Naina Chaturvedi

➕

See this book: amzn.to/3eC3x2p

——

#DataScience #DataScientists #AI #MachineLearning #Python #DeepLearning

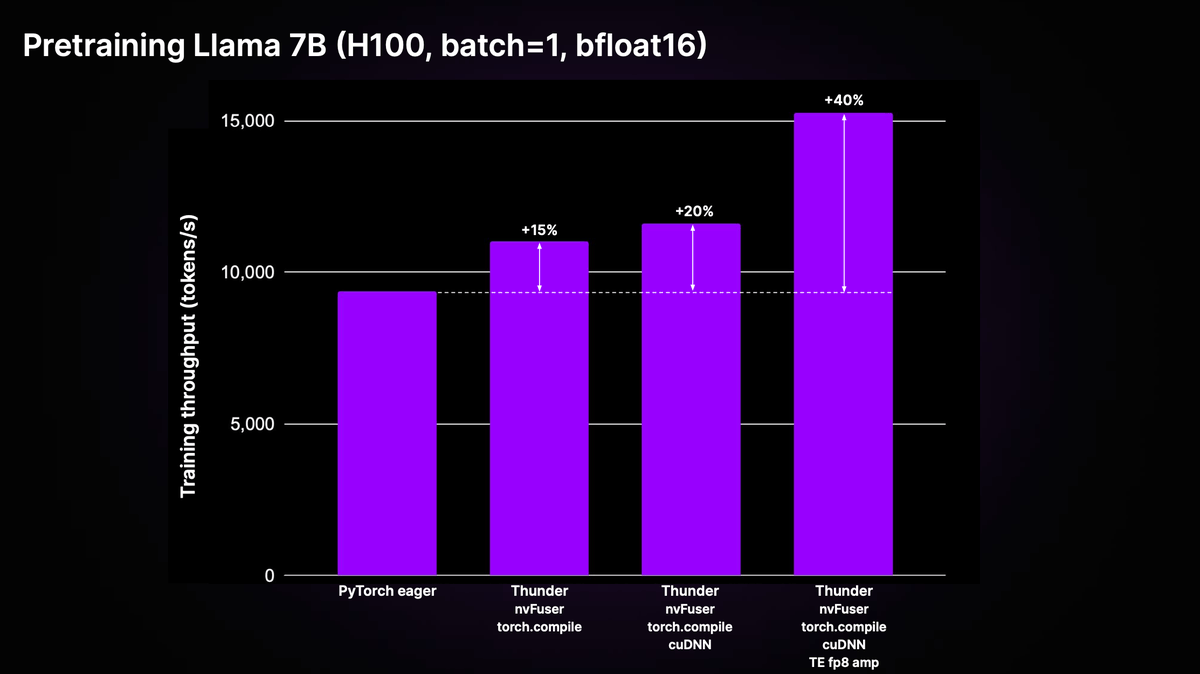

✨ Thunder lib from Lightning AI ⚡️ looks great: it can achieve a 40% speedup in training throughput compared to standard PyTorch eager code on H100, using a combination of executors including nvFuser, `torch.compile`, cuDNN, and TransformerEngine FP8.

📌 Supports…

Top Books Every Data Engineer Should Know. #BigData #Analytics #DataScience #IoT #IIoT #PyTorch #Python #RStats #TensorFlow #Java #JavaScript #ReactJS #GoLang #CloudComputing #Serverless #DataScientist #Linux #Books #Programming #Coding #100DaysofCode

geni.us/15-Data-Know

Top #MLOps Books to Read! #BigData #Analytics #DataScience #AI #MachineLearning #IoT #IIoT #PyTorch #Python #RStats #TensorFlow #Java #JavaScript #GoLang #CloudComputing #Serverless #DataScientist #Linux #Programming #Coding #100DaysofCode

geni.us/Top-MLOps--Boo…

Stanford's CS224 Machine Learning. #BigData #Analytics #DataScience #AI #MachineLearning #IoT #IIoT #PyTorch #Python #RStats #TensorFlow #Java #JavaScript #ReactJS #CloudComputing #Serverless #DataScientist #Linux #Programming #Coding #100DaysofCode

geni.us/Stanford-CS-224