Luxi (Lucy) He

@luxihelucy

Princeton CS PhD @PrincetonPLI. Previously @Harvard ’23 CS & Math.

ID: 1583989164223172608

https://lumos23.github.io/ 23-10-2022 01:10:27

66 Tweets

963 Followers

370 Following

Attending the Conference on Language Modeling from 10/6 to 10/9! If you want to chat about GenAI security, privacy, safety, or reasoning (I just started exploring it!), DM me :) Also, my team at Google AI is looking for interns. If you are interested, email your resume to me ([email protected]).

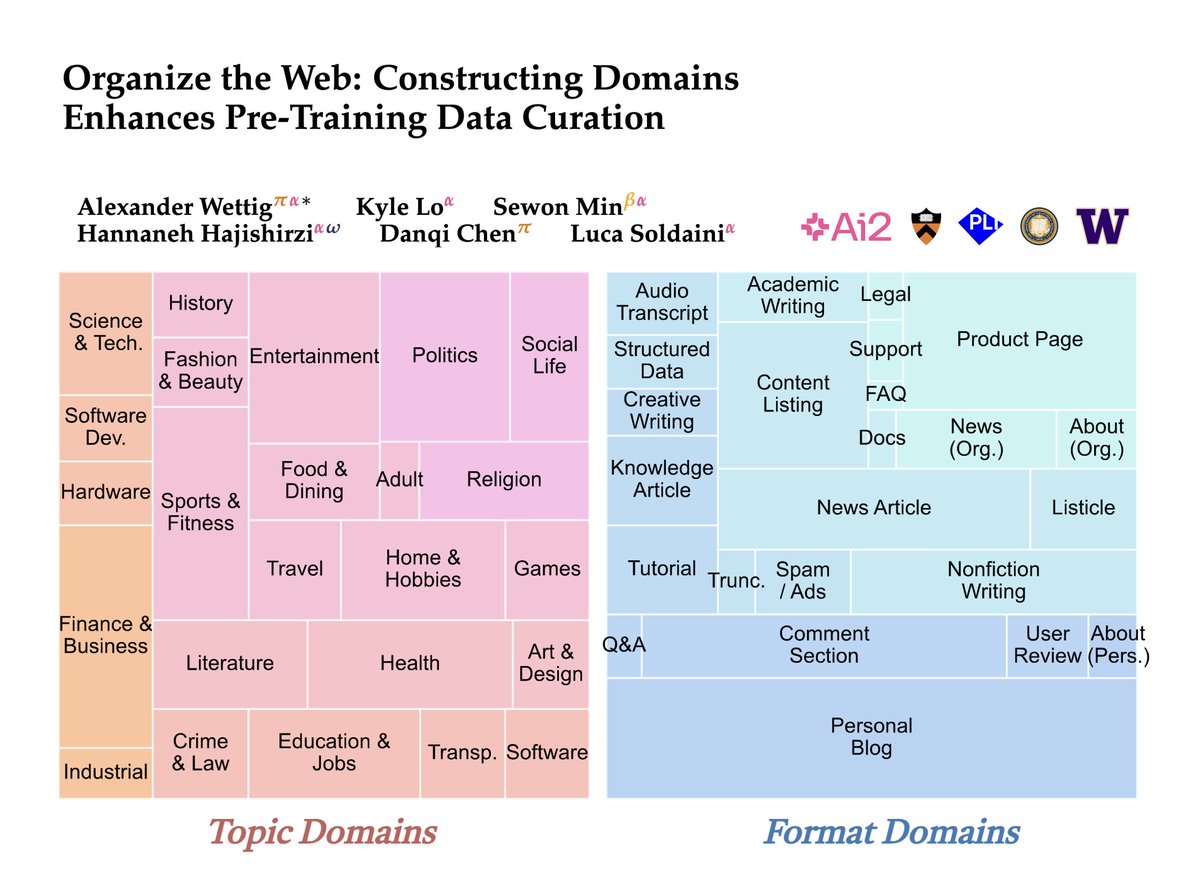

I'm attending the Conference on Language Modeling next week! Excited to meet folks and chat about alignment, safety, reasoning, LM evaluations, and more! Please feel free to reach out anytime :) Mengzhou Xia and I will present our work on data selection + safety on Tuesday afternoon; come chat with us!

Join us today at 3 pm ET for a discussion on AI safety and alignment with David Krueger 🤩 Submit your questions in advance at the link in the post!

Tune in to our PASS Seminar with Nathan Lambert next Monday! Submit your questions via the link in the thread :)

TOMORROW: PASS Seminar, 12/3 at 1pm ET!
Speaker: Percy Liang from Stanford University
Live: youtube.com/@PrincetonPLI/…
Recordings later at: youtube.com/@PrincetonPLI

Does all LLM reasoning transfer to VLMs? In the context of Simple-to-Hard generalization, we show: NO! We also give ways to reduce this modality imbalance.
Paper: arxiv.org/abs/2501.02669
Code: github.com/princeton-pli/…
Abhishek Panigrahi, Yun (Catherine) Cheng, Dingli Yu, Anirudh Goyal, Sanjeev Arora

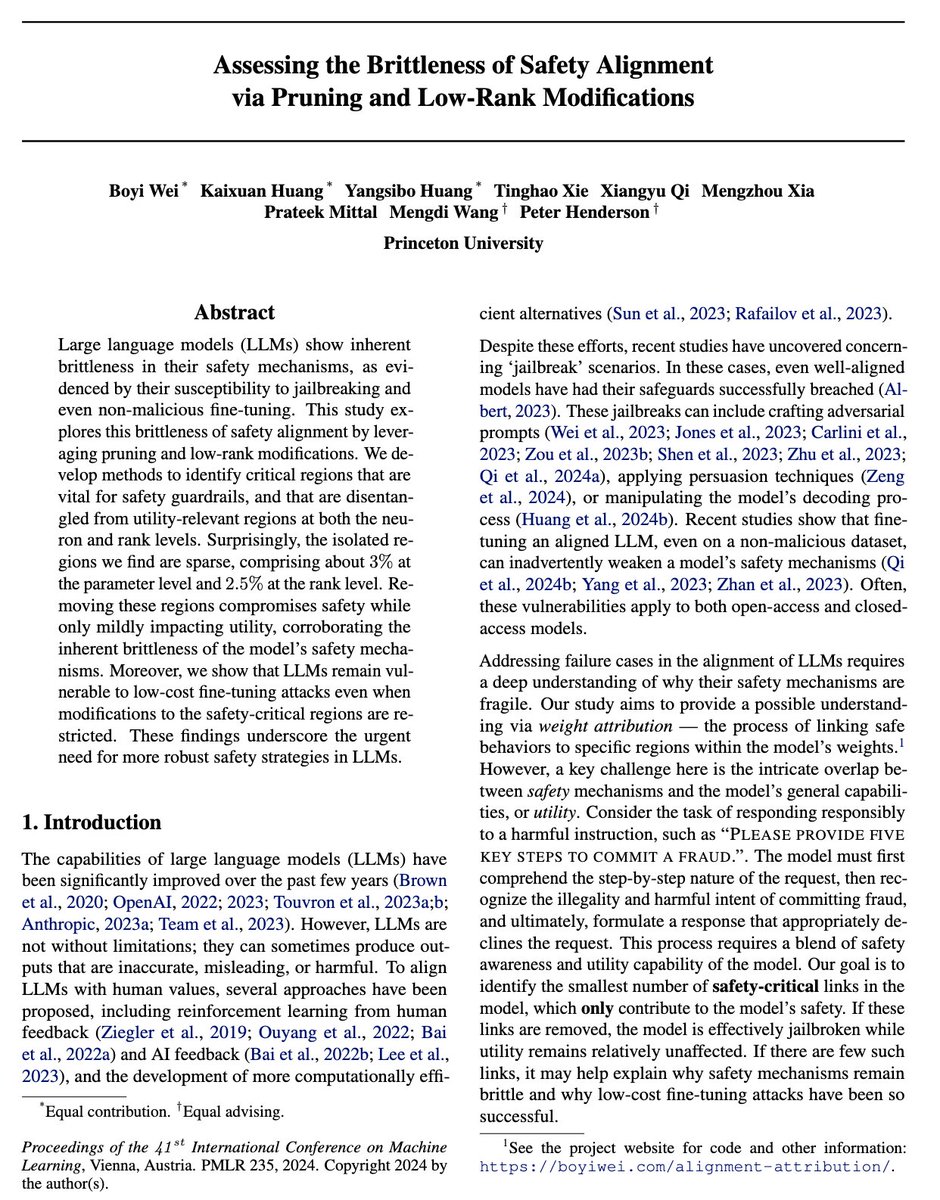

Preserving alignment during customization & fine-tuning is a challenging problem! Here's another work showing how language models can be broadly misaligned by fine-tuning. If you're interested, you can also check out work from our group by Luxi (Lucy) He, Boyi Wei, Xiangyu Qi, & others!