Pete Shaw

@ptshaw2

Research Scientist @GoogleDeepmind

ID: 1075460972

http://ptshaw.com 10-01-2013 02:28:10

85 Tweets

564 Followers

432 Following

Hi ho! New work: arxiv.org/pdf/2503.14481 With amazing collabs Jacob Eisenstein, Reza Aghajani, Adam Fisch, dheeru dua, Fantine Huot ✈️ ICLR 25, Mirella Lapata, and Vicky Zayats. Some things are easier to learn in a social setting. We show agents can learn to faithfully express their beliefs (along... 1/3

This was my first time submitting to TMLR, and thanks to the reviewers and AE Alessandro Sordoni for making it a positive experience! TMLR seems to offer some nice pros vs. ICML/ICLR/NeurIPS, e.g.:

- Potentially lower-variance review process
- Not dependent on the conference calendar

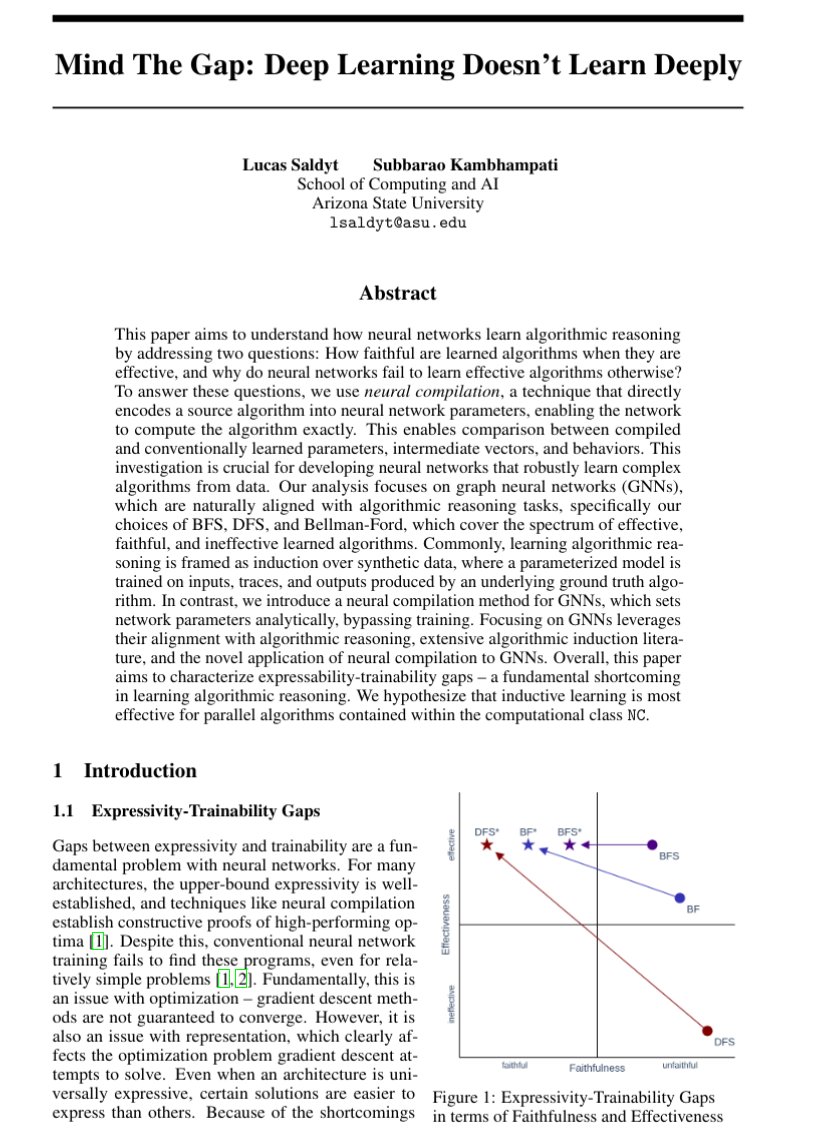

Neural networks can express more than they learn, creating expressivity-trainability gaps. Our paper, “Mind The Gap,” shows neural networks best learn parallel algorithms, and analyzes gaps in faithfulness and effectiveness. Subbarao Kambhampati (కంభంపాటి సుబ్బారావు)

AgentRewardBench will be presented at Conference on Language Modeling 2025 in Montreal! See you soon and ping me if you want to meet up!