Karel D’Oosterlinck

@kareldoostrlnck

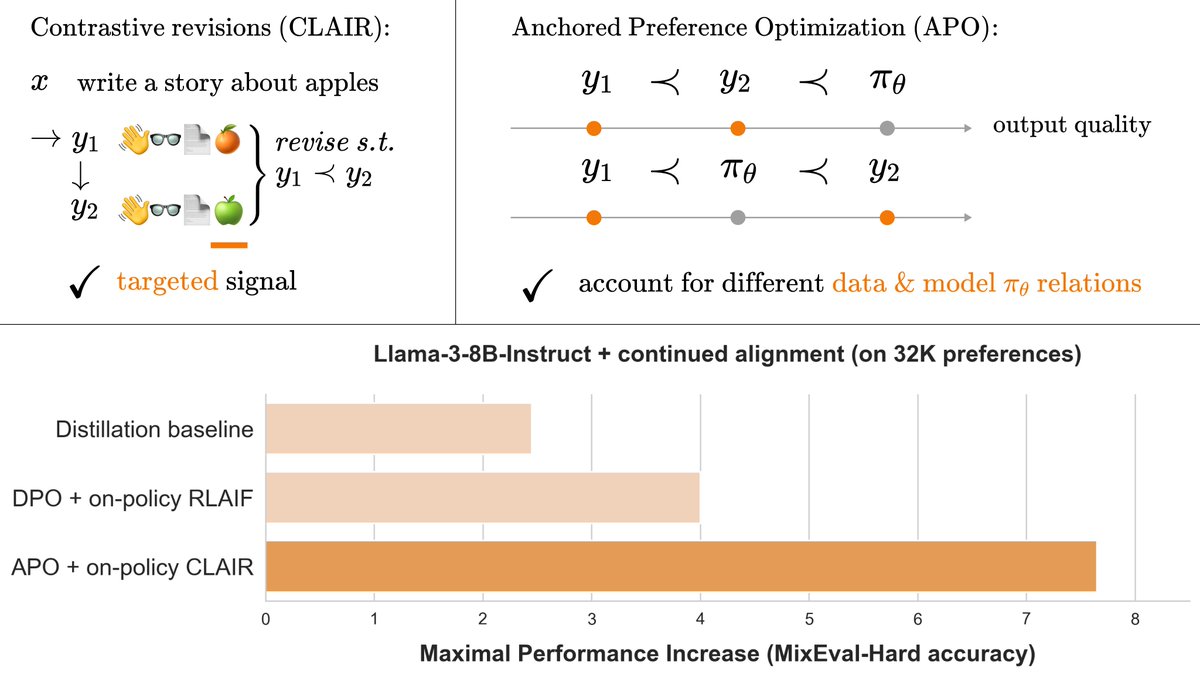

Alignment, Interpretable AI, RAG, Biomedical NLP. Intern @ContextualAI, PhD student @ugent, visitor @stanfordnlp. Instigator of hikes.

ID: 1107032711477235712

16-03-2019 21:35:40

772 Tweets

2.2K Followers

625 Following