Greg Durrett

@gregd_nlp

CS professor at UT Austin. I do NLP most of the time. he/him

ID:938457074278846468

06-12-2017 17:16:17

1.1K Tweets

6.0K Followers

760 Following

✨RECOMP at #ICLR2024!

Our poster is on ⏰Thursday at 10:45am (Halle B #138). Come check out our work & talk to my advisor Eunsol Choi and collaborator Weijia Shi at ICLR24!

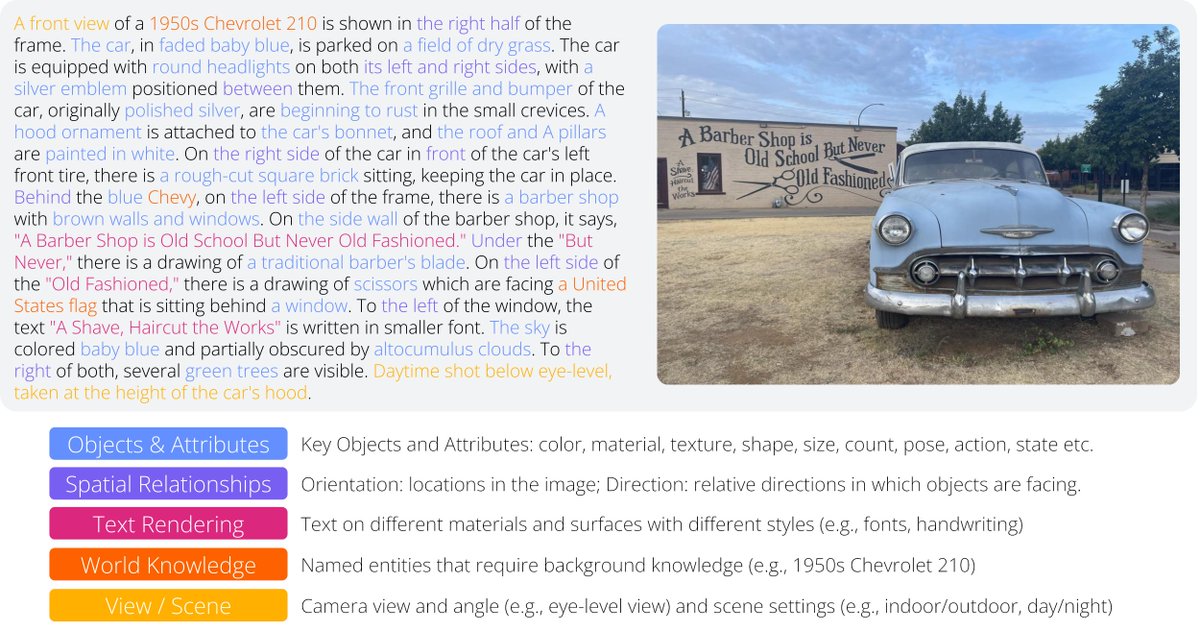

Can LMs correctly distinguish 🔎 confusing entity mentions across multiple documents?

We study how current LMs perform on a QA task when given ambiguous questions and a document set 📚 that requires challenging entity disambiguation.

Work done at UT Austin Computer Science ✨ w/ Xi Ye and Eunsol Choi

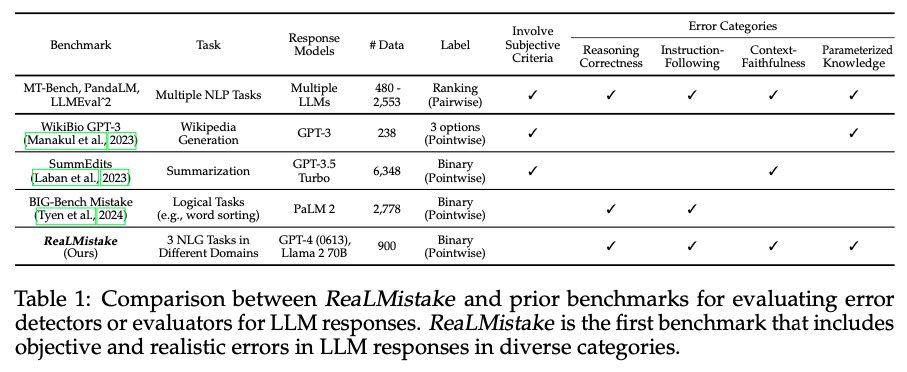

📢 New Preprint! Can LLMs detect mistakes in LLM responses?

We introduce ReaLMistake, an error detection benchmark with errors made by GPT-4 & Llama 2.

We evaluated 12 LLMs and showed that LLM-based error detectors are unreliable!

w/ Rui Zhang, Wenpeng_Yin, Arman Cohan +

arxiv.org/abs/2404.03602

Greg Durrett, Chaitanya Malaviya: Abhika Mishra also led a project where we annotated 1K LLM responses (Llama 2 7B & 70B Chat and ChatGPT) to diverse instruction-following prompts with span-level hallucinations and their types.

The data is publicly available!