Loïck BOURDOIS

@bdsloick

FAT5 boy

@huggingface Fellow 🤗

ID: 1446784639113306118

https://lbourdois.github.io/blog/ 09-10-2021 10:28:50

253 Tweets

223 Followers

180 Following

🤗 Sentence Transformers is joining Hugging Face! 🤗 This formalizes the existing maintenance structure, as I've personally led the project for the past two years on behalf of Hugging Face. I'm super excited about the transfer! Details in 🧵

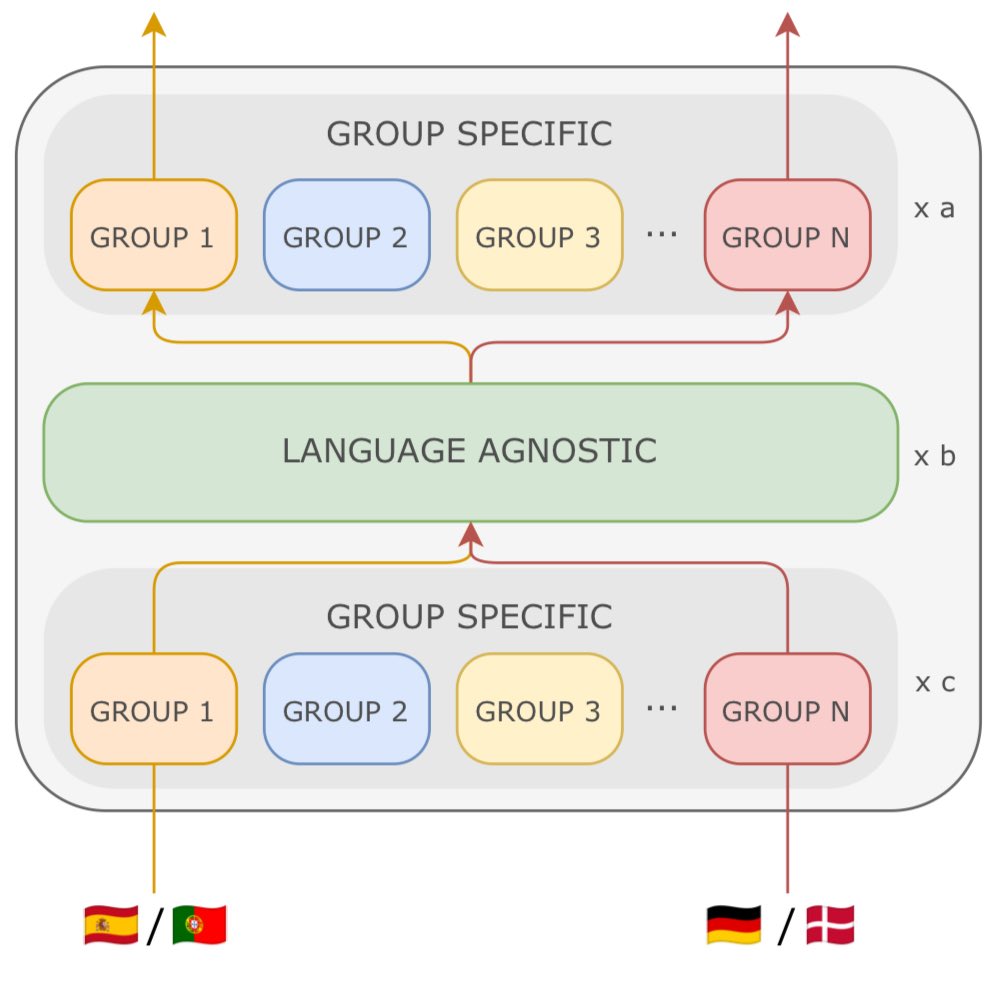

🚨 New paper from AI at Meta 🚨 You want to train a massively multilingual model, but the languages keep interfering with each other and you can’t boost performance? A dense model is suboptimal when mixing many languages, so what can you do? You can use our new architecture, Mixture of Languages! 🧵1/n