Naomi Saphra

@nsaphra

Waiting on a robot body. ML/NLP. All opinions are universal and held by both employers and family. Same username on every lifeboat off this sinking ship.

ID: 215113195

http://nsaphra.github.io/ 13-11-2010 01:15:56

17.0K Tweets

7.2K Followers

1.2K Following

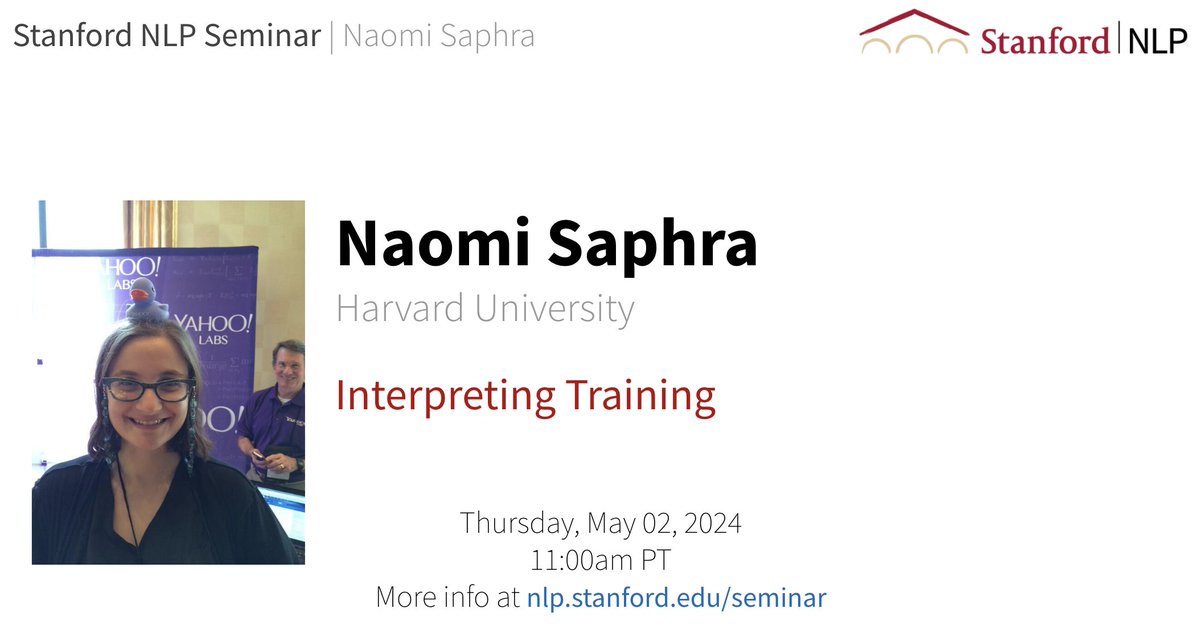

For this week’s NLP Seminar, we are thrilled to host Naomi Saphra to talk about 'Interpreting Training'!

When: 05/02 Thurs 11am PT

Registration form for non-Stanford affiliates (closes at 9am PT on the day of the talk): forms.gle/XJUQQZTEn6QLqR…

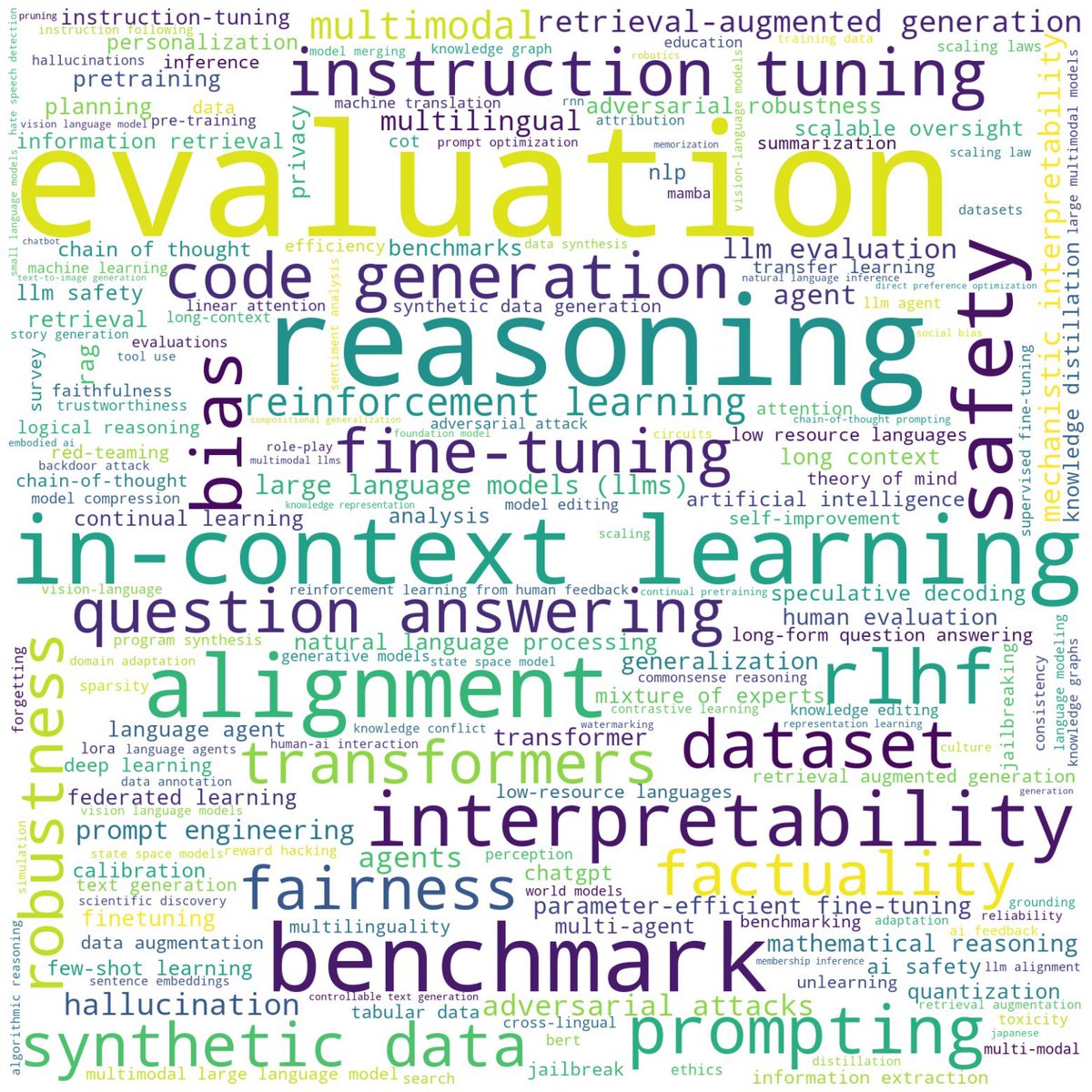

Conversations about #AI fairness and AI assistive technology need to include disabled people… #KempnerInstitute Research Fellow Naomi Saphra discusses fairness and disability in this important new article from the Harvard Gazette.

bit.ly/4aijrfB