Zhengxuan Wu

@zhengxuanzenwu

member of technical staff @stanfordnlp, goes by zen, life is neither wind nor rain, nor clear skies

ID: 1288758678141599744

https://nlp.stanford.edu/~wuzhengx/ 30-07-2020 08:50:01

330 Tweets

1.1K Followers

633 Following

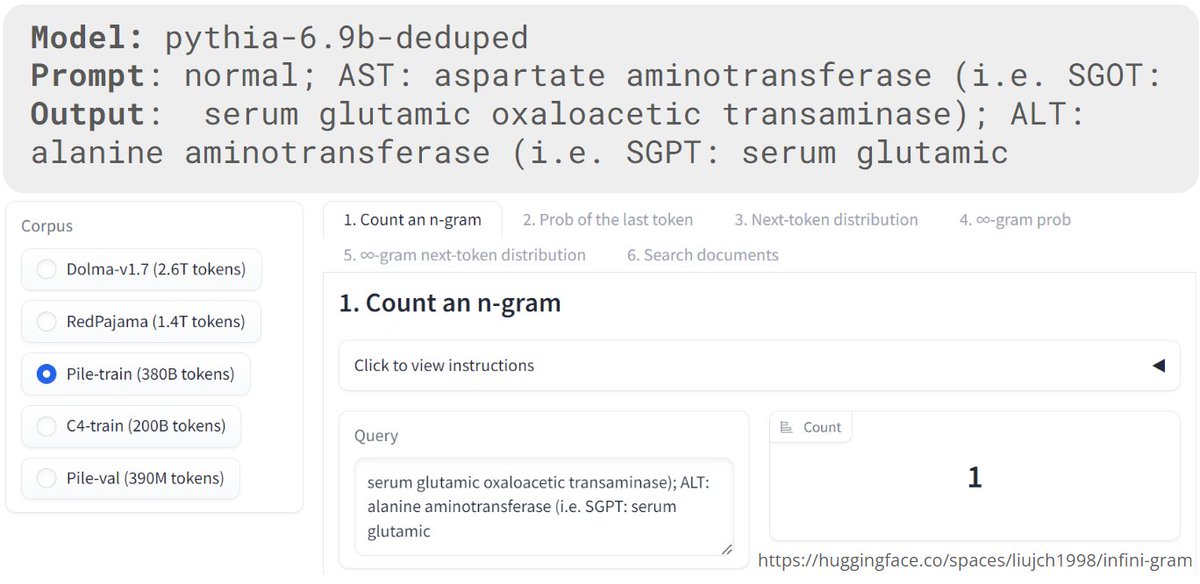

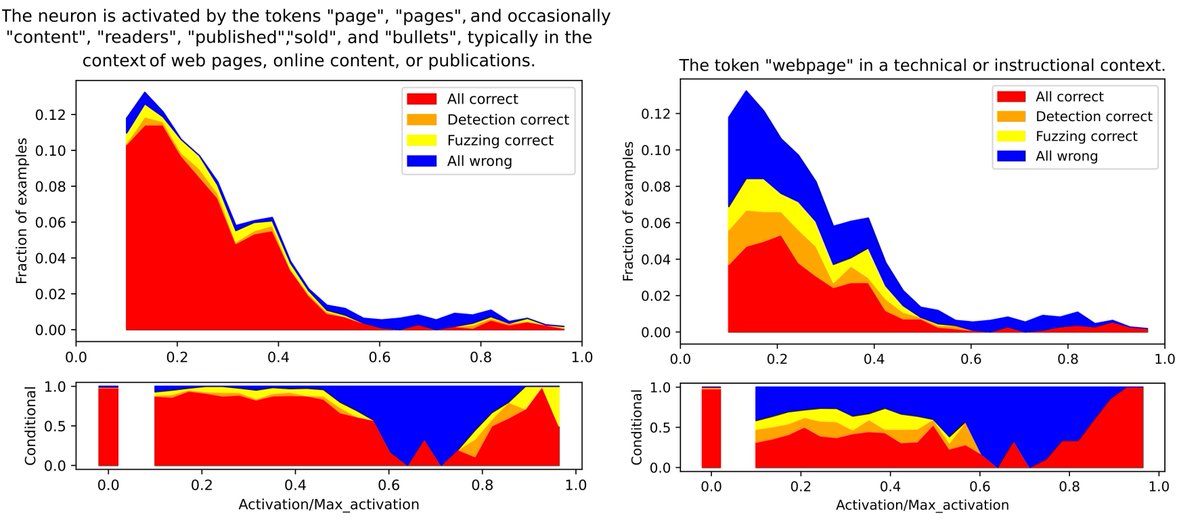

Sparse autoencoders recover a diversity of interpretable features but present an intractable problem of scale to human labelers. We build new automated pipelines to close the gap, scaling our understanding to GPT-2 and Llama-3 8B features. @goncaloSpaulo Jacob Drori Nora Belrose

Attending #ACL2024? Come hear about our recent work from TAU/Google (with collaborators!) about interpretability, knowledge, and reasoning in LLMs! Sohee Yang @ ACL 2024 Jesujoba Alabi Alon Jacovi Gal Yona Zhengxuan Wu

Stanford NLP Group awards at #ACL2024 ▸ Best paper award Julie Kallini ✨ et al ▸ Outstanding paper award Aryaman Arora et al ▸ Outstanding paper award Weiyan Shi et al ▸ Best societal impact award Weiyan Shi et al ▸ 10 year test of time award Christopher Manning et al Congratulations! 🥂

The Linear Representation Hypothesis is now widely adopted despite its highly restrictive nature. Here, Csordás Róbert, Atticus Geiger, Christopher Manning & I present a counterexample to the LRH and argue for more expressive theories of interpretability: arxiv.org/abs/2408.10920

I am BEYOND EXCITED to publish our interview with Krista Opsahl-Ong from Stanford AI Lab! 🔥 Krista is the lead author of MIPRO, short for Multi-prompt Instruction Proposal Optimizer, and one of the leading developers and scientists behind DSPy! This was such

Should AI be aligned with human preferences, rewards, or utility functions? Excited to finally share a preprint that Micah Carroll Matija Franklin Hal Ashton & I have worked on for almost 2 years, arguing that AI alignment has to move beyond the preference-reward-utility nexus!

🚨 New paper alert! 🚨 SAEs 👾 are a hot topic in mechanistic interpretability 🛠️, but how well do they really work? We evaluated open-source SAEs from OpenAI, Apollo Research, and Joseph Bloom on GPT-2 small and found they struggle to disentangle knowledge compared to neurons.