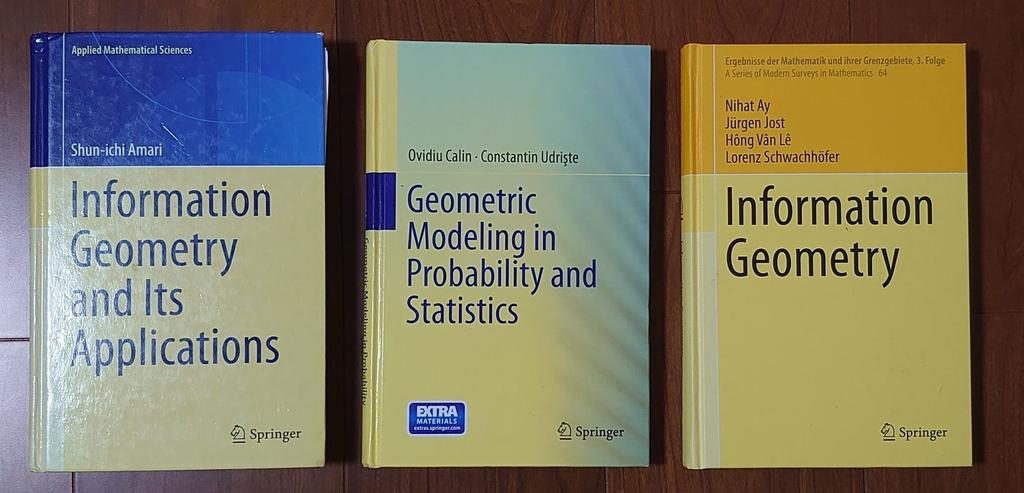

Frank Nielsen

@FrnkNlsn

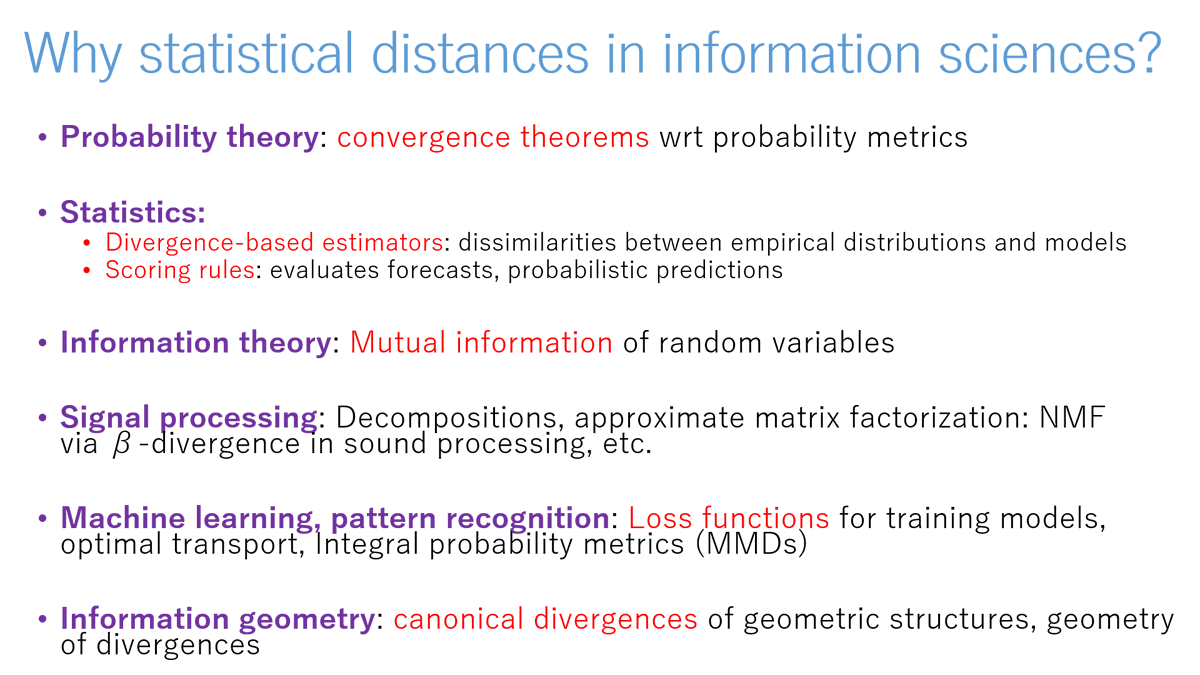

Machine Learning & AI, Information Sciences & Information Geometry, Distances & Statistical models, HPC.

"Geometry defines the architecture of spaces" @SonyCSL

ID:117258094

https://franknielsen.github.io/index.html

25-02-2010 01:38:54

5.2K Tweets

23.4K Followers

1.3K Following